Building Better AI Models with Medical Experts and Linguists

Countless articles discuss how AI can assist health professionals in their practice — not to mention those that speculate it could replace them altogether. Taking the topic from the opposite perspective, we want to share how health practitioners and linguists helped us develop AI models and improve our experiments in medical information retrieval.

At Clinia, we develop health AI solutions that help health practitioners and patients quickly find reliable medical information—from clinical guidelines to peer-reviewed research.

Early on, we realized something fundamental: to build trustworthy AI models for information retrieval in healthcare, we couldn’t rely solely on the machine learning developers, NLP linguists, and data quality engineers of our R&D team. In other words, technical expertise is not enough. If we want to build reliable, strong models, medical expertise has to be part of the process from the start. That’s why we brought health practitioners (General Practitioners, specialists, and nurses) directly into our model development work, not only as consultants but as annotators who shaped the data our models learn from.

Working with them taught us far more than we expected — and we think these lessons could benefit anyone building health applications.

For a layman, type 1 diabetes vs. type 2 diabetes, viral pneumonia vs. bacterial pneumonia, or ischemic stroke vs. hemorrhagic stroke are simply pairs of similar labels. For a health practitioner, however, these distinctions correspond to completely different care pathways. Without clinical expertise, a response to a medical query can appear technically correct while missing the practical realities of diagnosis and treatment.

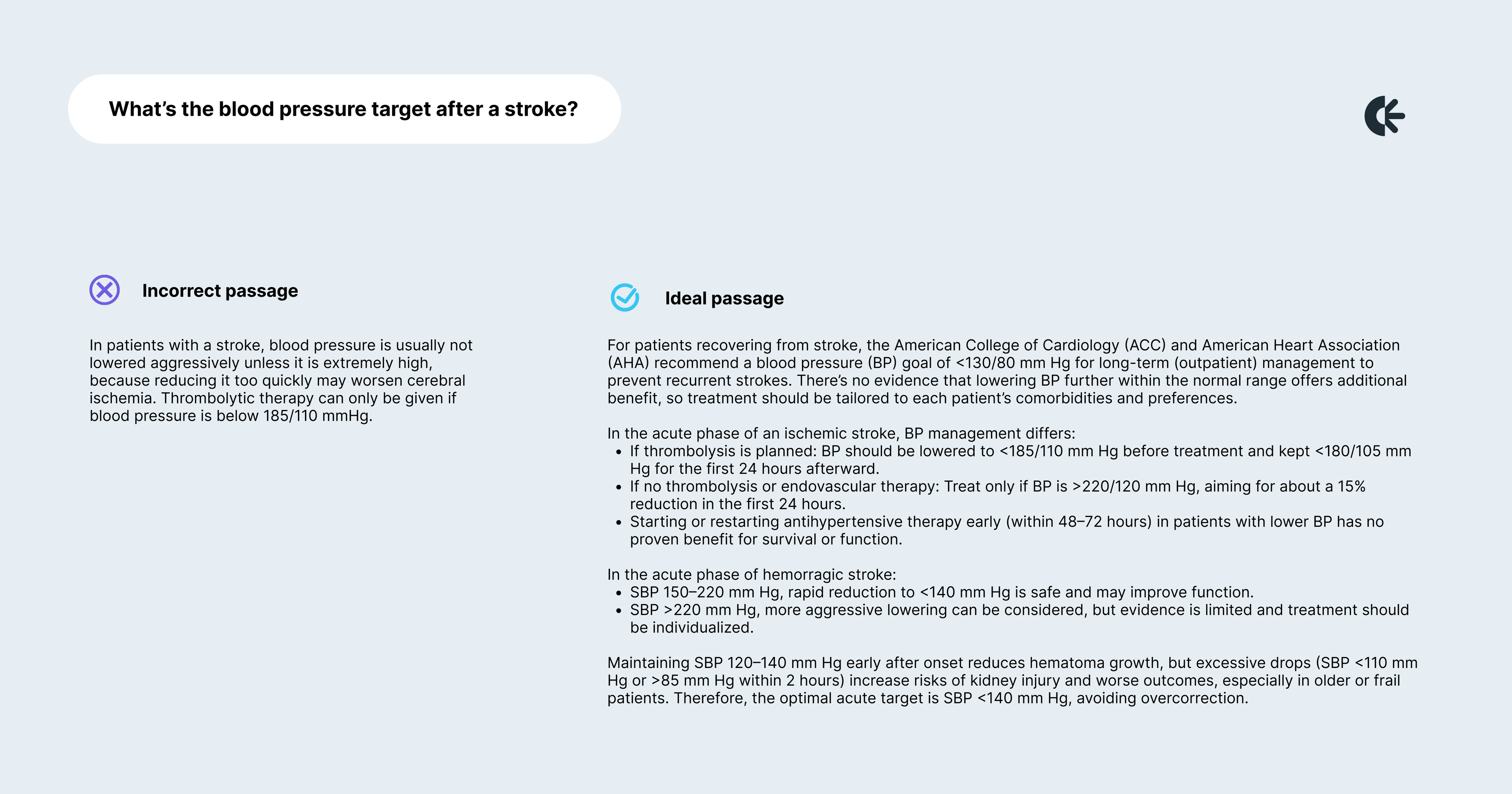

When we use the term stroke, for instance, it usually refers to an ischemic stroke, which accounts for about 90% of cases in the United States, according to Healthline (May 19, 2023). Other types, such as hemorrhagic strokes, represent 10% of cases. Most of the time, being familiar only with the first type of stroke, a lay annotator—or a generic model—might incorrectly validate the following query as matching the passage below:

The incorrect passage focuses narrowly on blood pressure thresholds for thrombolytic therapy in acute ischemic stroke, without addressing the long-term blood pressure targets recommended for stroke survivors or the distinct management strategies for different stroke types (ischemic vs. hemorrhagic). A physician would recognize that the query is about the overall blood pressure goal after a stroke, not just eligibility for thrombolysis. By presenting only acute ischemic stroke protocols, the passage is misleading: it could cause someone to apply acute treatment rules to chronic management or to other stroke types, which can be unsafe and clinically inappropriate. In short, the passage fails to distinguish between acute vs. long-term management and stroke subtypes, making it an unreliable answer to the query.

It takes a medical professional to determine whether a reasoning truly holds up — both in accuracy and in clinical relevance. By working with physicians, we learned to design an annotation framework that respects medical reasoning.

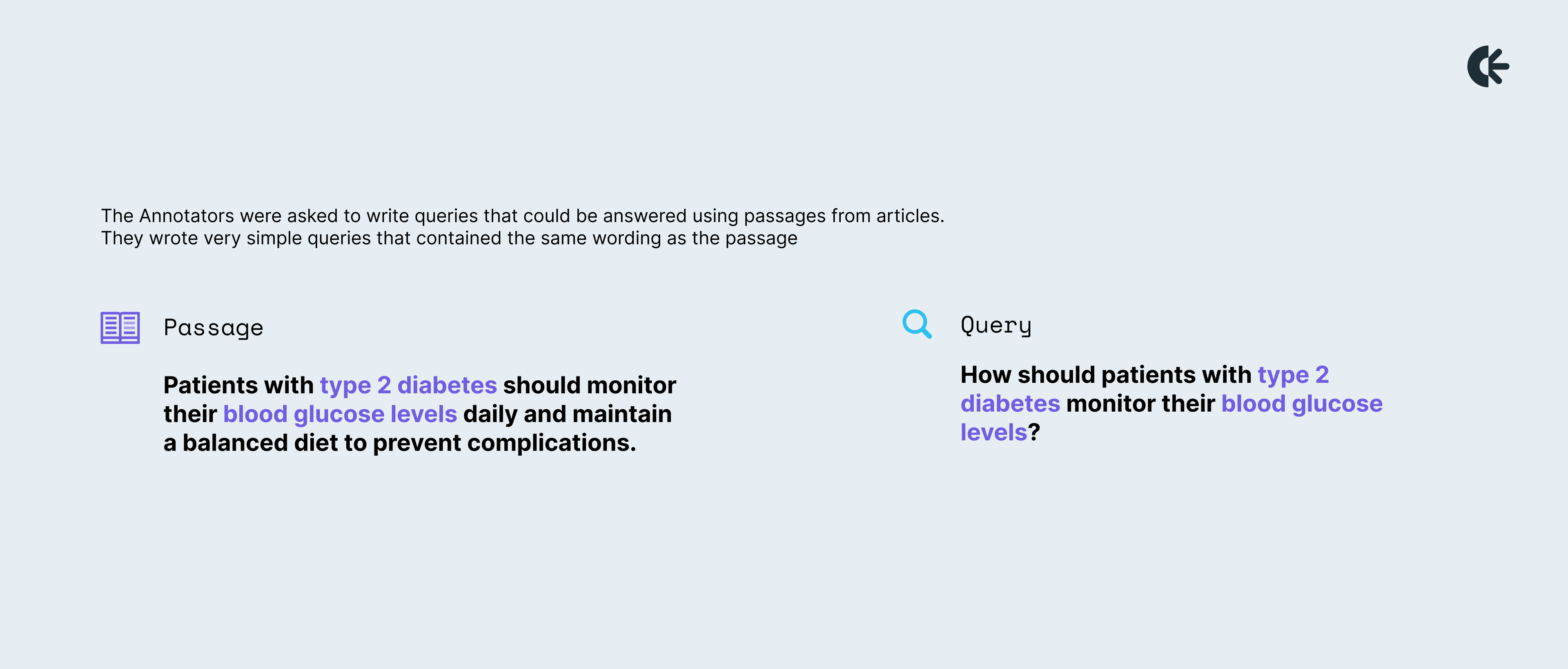

One of our very first tasks with medical annotators was to build a dataset of query–passage pairs where the passage is deemed relevant to answer the query. We asked doctors to read articles they would naturally consult in their practice to deepen their understanding of a topic, and to write queries that could be answered using passages from those articles.

For most of them, the first reflex was to write very simple queries that contained the same wording as the passage, for instance:

We quickly realized that these passages didn’t contain any real challenge and could be answered with simple keyword matching methods. Since we wanted to build more advanced models, we needed to go beyond basic approaches.

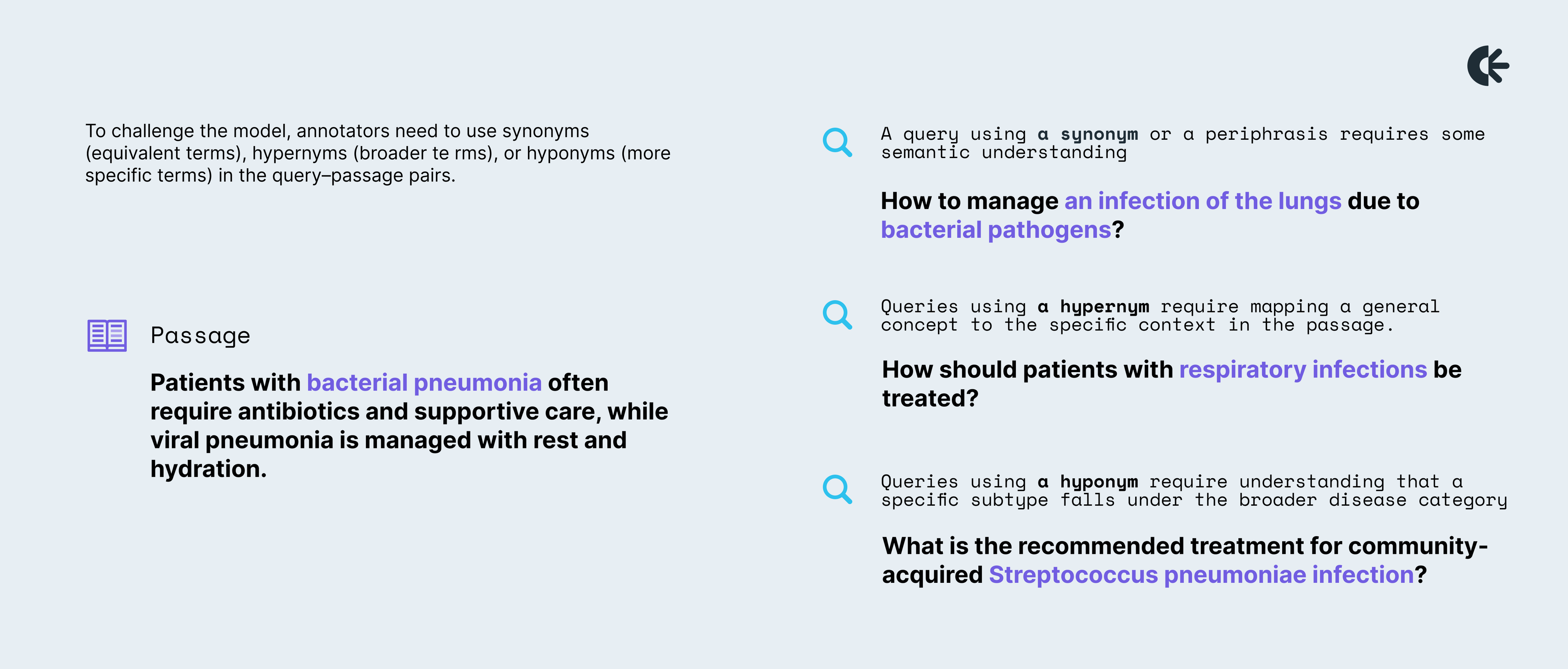

In a second iteration, instead of repeating the exact words, we asked the annotators to use synonyms (equivalent terms), hypernyms (broader terms), or hyponyms (more specific terms) in the query–passage pairs.

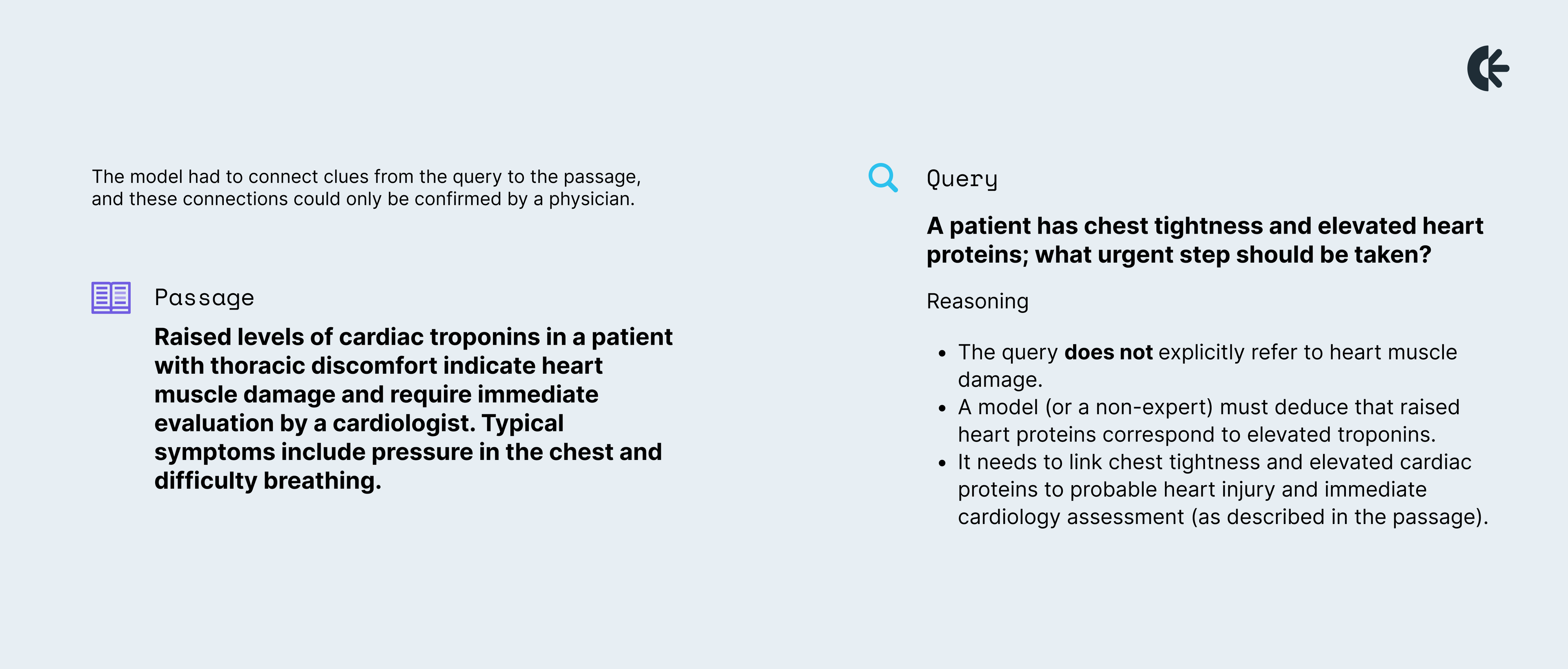

We realized that the first two iterations could be handled by our linguist annotators. To truly make a rigorous system, we needed tasks that only physicians—and advanced models—could tackle. In the third iteration, we raised the bar by designing queries that required reasoning and inference. The model had to connect clues from the query to the passage, and these connections could only be confirmed by a physician.

Only a medical professional can confirm that this chain of reasoning is accurate and clinically appropriate.

Building high-quality clinical datasets required both linguists and physician annotators, but we quickly realized that they excel at different aspects of the task. Doctors understand medicine, but annotation calls for a unique skill set, juggling text, semantic relationships, and tasks they are unaccustomed to. Linguists, on the other hand, are skilled at crafting nuanced queries and recognizing semantic relationships, but they lack the clinical knowledge to verify medical accuracy. At first, this gap was a challenge, but over time, we learned to leverage both areas of expertise by splitting the evaluation of passages into two dimensions: linguistic quality and medical accuracy. We applied this dual approach when evaluating RAG in an arena framework.

Linguists focused on crafting and assessing queries for clarity, nuance, conciseness, and semantic rigour, while doctors validated the clinical correctness of the passages and answers. This strategy allowed us to combine linguistic precision with medical reliability, ensuring that our datasets were both sophisticated and trustworthy.

One of the most important lessons was that involving physicians went far beyond improving datasets. Their perspectives fundamentally influenced our approach—shaping how we define model evaluation criteria, determine what constitutes meaningful results, and consider the end-user experience.

The value came not from doctors simply explaining medicine or engineers explaining AI, but from genuine collaboration: questioning assumptions, refining approaches, and iterating together. This process influenced both our data and our entire methodology for developing models. Physicians are right to hold AI to rigorous standards. Building trust required transparency about limitations, careful attention to feedback, and openness in our work. That trust enabled meaningful collaboration and will be essential for adoption in clinical practice.

The benefits of this collaboration are mutual: for clinicians, building a tool that provides doctors with quick, evidence-based answers they can genuinely trust is innovative and addresses what has long been missing. As for linguists, working on an information retrieval project offers insight into how models are trained, the data they require, and how their language expertise can directly improve model accuracy, clarity, and cultural nuance. Seeing how much their effectively incorporated feedback matters has been very motivating for them to stay involved, one of our medical annotators noted.

Ultimately, our work showed that when clinicians and linguists contribute directly to model development, the results go far beyond better datasets—they reshape the entire approach to AI in healthcare, which is essential for building tools that truly serve health professionals and their patients.